It would be really nice to have sampling rates with an integer number of samples per nominal line cycle for North American locations, e.g. 6 kHz or 1.2 kHz. Maybe a check box to switch between the normal 1x/5x rates and 1.2x/6x rates would avoid cluttering the sample rate list. Being able to manually enter arbitrary rates would also allow this.

This isn’t just about interference from power lines, but also from lighting (which usually flickers at 2x the line rate, or 120 Hz). Power lines are the more general case, so handling 60 Hz should be sufficient for lighting-related measurements too.

The main advantage of this is that averaging with an integer number of samples can then be done, which is a LOT easier and faster than averaging across a fractional number of samples for measurements that are impacted by the AC power.
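As a rough illustration of why the integer case is convenient, here is a minimal Python sketch. The test signal (a DC level plus 60 Hz ripple), the function name, and the 5 kHz comparison rate are assumptions chosen for illustration, not Joulescope data or API.

```python
import math

def mean_over_window(sample_rate_hz, n_samples, dc=1.0, ripple=0.5, line_hz=60.0):
    """Average n_samples of a synthetic DC level plus line-frequency ripple."""
    total = 0.0
    for k in range(n_samples):
        t = k / sample_rate_hz
        total += dc + ripple * math.sin(2 * math.pi * line_hz * t)
    return total / n_samples

# 6 kHz gives exactly 100 samples per 60 Hz cycle, so a plain mean
# over 100 samples spans one whole cycle and the ripple cancels.
err_6k = abs(mean_over_window(6000, 100) - 1.0)

# 5 kHz gives 83.33 samples per cycle; the nearest whole-sample window
# (83 samples) covers slightly less than one cycle and leaves a residual.
err_5k = abs(mean_over_window(5000, 83) - 1.0)

print(f"6 kHz, 100 samples: error = {err_6k:.2e}")
print(f"5 kHz,  83 samples: error = {err_5k:.2e}")
```

The size of the residual in the non-integer case depends on the ripple amplitude and on where in the cycle the window starts, so the printed number is only indicative.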

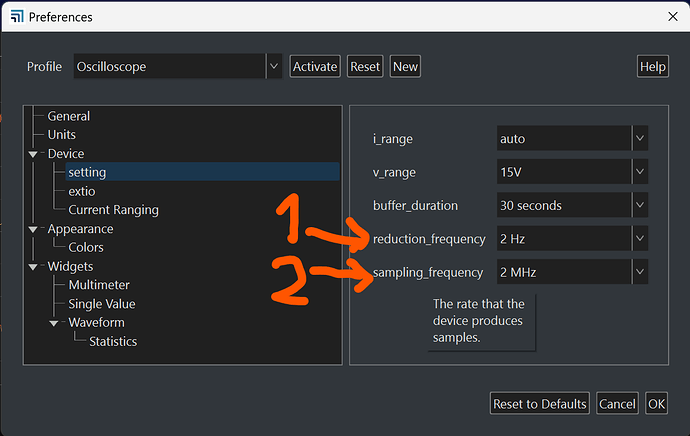

Hi @Jeremy - Thanks for the feature request! I definitely understand the desire for the multimeter and single value widget displays to have a number of power line cycles (NPC) setting, which they do not have currently. In the Joulescope UI terminology, this is reduction_frequency, soon to be renamed to statistics frequency. I am not sure if you mean this or sampling_frequency.

I have some questions:

- Are you talking about reduction_frequency (1), which affects the update rate for the Multimeter Widget and Single Value Widget, or about sampling_frequency (2), which affects the Waveform Widget?

- Do you care about the Joulescope UI, python scripts, or both?

- If you are talking about sampling_frequency and the Waveform Widget, would you need additional tools, such as “snap to NPC”, to make this useful?

Hi Matt, These sampling rates are more for the oscilloscope/Waveform Widget and exported data than for the multimeter or single value display, though they might be helpful there too.

- I’m interested in additional sampling_frequency values (2). I leave the reduction frequency at 2 Hz, and I doubt I could read anything faster than 10 Hz.

- The Joulescope UI is my main interest right now. I am starting to dabble in the python scripts, but averaging over fractional samples isn’t intractable in python.

- I would not need “snap to NPC”. It seems like a good idea that would be nice, but isn’t essential for me.

Hi @Jeremy - I still don’t think I understand how having these sampling rates helps with the Joulescope UI Waveform Widget. First, both the Joulescope JS110 and JS220 sample at 2 Msps, always. The JS220 then internally downsamples to 1 Msps to keep the USB data rate sane since it transfers float32 rather than native samples to the host. The sampling_frequency setting is actually downsampling from that native sample rate.

Today, you can sample at 1 Msps and add dual markers over n/60 Hz = n * 16.67 ms. The total time error, even without interpolation, is ±1 µs max (±0.5 µs typical) error at 1 Msps => 0.006%.
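The arithmetic behind that figure can be checked with a short Python sketch. It assumes the worst-case timing error is one sample period, as stated above; the function name is hypothetical, and the 1 kHz row is an extrapolation using the same bound rather than a figure from this thread.

```python
LINE_HZ = 60.0  # nominal North American line frequency

def worst_case_interval_error(sample_rate_hz, n_cycles=1, line_hz=LINE_HZ):
    """Relative error from being off by one sample period over n_cycles."""
    interval_s = n_cycles / line_hz          # 16.67 ms for one cycle
    sample_period_s = 1.0 / sample_rate_hz   # 1 µs at 1 Msps
    return sample_period_s / interval_s

for rate_hz in (1_000_000, 1_000):
    err = worst_case_interval_error(rate_hz)
    print(f"{rate_hz:>9} Hz: {err:.4%} of one line cycle")
```

Spanning more cycles with the markers (a larger `n_cycles`) shrinks the relative error proportionally, since the one-sample uncertainty stays fixed while the interval grows.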

Even if the sampling rate was 6000 Hz, you would have to add dual markers for the correct interval, unless we add a way to snap dual marker duration to NPC.

From your original question:

- Are you saying that the ±1 µs max error = 0.006% at 1 Msps is too much?

- Are you wanting to downsample for reduced RAM and/or JLS file storage, and the error at the selected sample rate, such as 1 kHz, is too much?

Why am I asking these questions? Because the implementation would require work and FPGA resources. The challenge is that the JS220 will be implementing downsampling on-instrument to reduce the USB data rate and better allow for multiple Joulescopes on the same USB root hub. However, 1,000,000 (1 Msps) = 2**6 * 5**6, but 60 (Hz) = 2**2 * 3 * 5. That factor of 3 is not a factor of 1,000,000, so no integer decimation of 1 Msps yields a multiple of 60 Hz, which means something needs to upsample or interpolate. This is an additional computation and complication that is relatively easy to implement on the host computer, but more work in the JS220 FPGA. It also would mean significant changes to our in-progress downsampling FPGA work.
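The factorization argument can be sketched in a few lines of Python. The helper name `prime_factors` and the choice of 6000 Hz and 1200 Hz as targets (taken from the original request) are illustrative; nothing here reflects the actual FPGA or host implementation.

```python
from fractions import Fraction

def prime_factors(n):
    """Return {prime: exponent} for a positive integer n."""
    factors = {}
    p = 2
    while p * p <= n:
        while n % p == 0:
            factors[p] = factors.get(p, 0) + 1
            n //= p
        p += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors

native_hz = 1_000_000
print("1 Msps:", prime_factors(native_hz))  # only 2s and 5s
print("60 Hz: ", prime_factors(60))         # includes a factor of 3

# Reduce target/native to lowest terms: the numerator is the upsampling
# factor and the denominator is the decimation factor.
for target_hz in (6000, 1200):
    ratio = Fraction(target_hz, native_hz)
    up, down = ratio.numerator, ratio.denominator
    print(f"{target_hz} Hz: upsample by {up}, then decimate by {down}")
```

A numerator other than 1 is what forces the extra upsample or interpolation step. On the host, a polyphase resampler such as SciPy's `resample_poly(x, up, down)` is one option that takes these two integers directly; whether the Joulescope software would use it is not stated in this thread.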

Hi Matt,

When data is exported from the Widget (e.g. to process in a spreadsheet), having a sample rate that has an integer number of samples per power line cycle allows using a simple average or sum. If the number of samples is not integer, averaging over a cycle requires using weights which requires a lot more processing if the sample set is large. That is the main crux of my use case.
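To make the difference concrete, here is a minimal pure-Python sketch of both cases. The function names are hypothetical, and the fractional version assumes each sample represents the interval from its own index to the next one, which is only one of several reasonable weighting conventions.

```python
import math

def cycle_means_integer(samples, n_per_cycle):
    """Plain per-cycle mean; valid when n_per_cycle is a whole number."""
    n_cycles = len(samples) // n_per_cycle
    return [
        sum(samples[i * n_per_cycle:(i + 1) * n_per_cycle]) / n_per_cycle
        for i in range(n_cycles)
    ]

def cycle_means_fractional(samples, n_per_cycle):
    """Weighted per-cycle mean for a non-integer n_per_cycle.

    Sample k is treated as covering the interval [k, k + 1), and each
    sample is weighted by how much of that interval lies inside the cycle.
    """
    means = []
    i = 0
    while (i + 1) * n_per_cycle <= len(samples):
        start, stop = i * n_per_cycle, (i + 1) * n_per_cycle
        total = 0.0
        for k in range(math.floor(start), math.ceil(stop)):
            overlap = min(k + 1, stop) - max(k, start)  # fraction of sample k used
            total += overlap * samples[k]
        means.append(total / n_per_cycle)
        i += 1
    return means

data = [float(v % 7) for v in range(240)]  # arbitrary placeholder data

# 1.2 kHz: 20 samples per 60 Hz cycle, so a plain mean per block works.
print(cycle_means_integer(data, 20)[:3])

# 1 kHz: 16.67 samples per cycle, so the samples at each boundary are
# split between adjacent cycles with fractional weights.
print(cycle_means_fractional(data, 1000 / 60)[:3])
```

In a spreadsheet, the integer case is a single AVERAGE over a fixed-size block of rows, while the fractional case needs a separate weight column whose values change from cycle to cycle.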

Snapping cursors isn’t really what I’m looking for. I wouldn’t object to it, but it’s not the main thing I need.

The nominal sampling rate information is helpful, and if there’s no way to make the device sample natively, I don’t see any issues with resampling on the host.

- For my needs, the error for dropping or doubling one sample of 33k333 would not be a problem. Very spiky / high crest-factor data might have a higher error than the sample rate would imply, but for my needs it would be close enough.

- I mainly want to reduce .jls file sizes, and the resulting size of spreadsheets. I frequently record for hours, and I occasionally need to do multi-day recordings.

Thanks for the additional detail @Jeremy. I think that I have a much better understanding. You want to downsample JLS files to reduce storage requirements without having increased error due to quantized sampling periods, and you would rather not perform interpolation to help minimize this error.

I have opinions on doing analysis in Excel rather than Python. Excel is a great tool, but Python is much more powerful for data analysis, especially for larger data sets. If Python-only is not the answer for you, perhaps using Python to generate an Excel report could be.

I am very interested to see one of your Excel workbooks. Is this something you could share publicly here or privately through DM?